Thanks to the WCAG guidelines for audio-described (AD) videos, support for visually impaired viewers of films and videos is on the rise. Most of us are familiar with closed captions for hearing-impaired viewers. Audio descriptions are spoken descriptions of what is happening on screen, added alongside the dialogue (example here).

Major content creators, from Disney to Netflix, support audio-described videos by providing separate AD audio tracks, the same way foreign-language audio tracks are offered as video player options. This is the ideal way to support AD, since it lets a user switch back and forth between tracks seamlessly, without interrupting playback.

In some cases, however, audio descriptions are packaged as a separate video file. Often this is because the content is legacy, or because the video lacks enough pauses between lines of dialogue to fit the descriptions at the appropriate timestamps. At the time of this research, this was true of YouTube, which did not support AD automatically (since its content is user-created) but allowed users to upload secondary videos with AD included. A separate mp4 file has several drawbacks: larger file sizes, lag when switching between versions, and, more importantly, a longer runtime, since AD video files tend to run longer to make room for the pauses and additional narration.

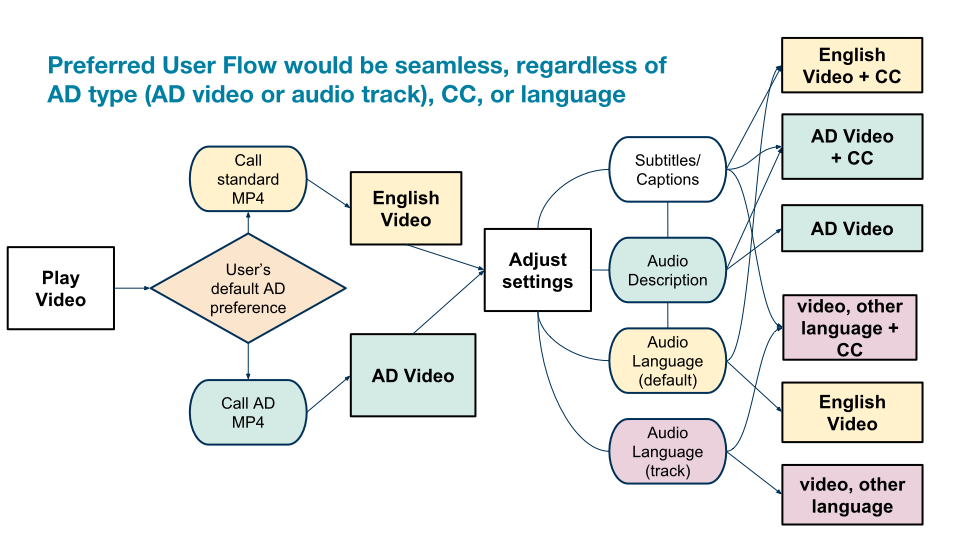

For Pearson, our content fits the second case: audio-described mp4 videos. From a user's perspective, though, the experience should be nearly identical whether they turn any audio on or off (default, foreign language, or audio descriptions), and whether the source is one video with multiple audio tracks or multiple videos. I needed to add this feature to our existing ReactJS video player UI, but I also wanted it to scale to newer videos with AD audio tracks and foreign-language audio tracks, using the same UI.

From interviews with our accessibility specialists and the research spike that uncovered the information above, I also learned that on Android and newer macOS devices, users could now turn on audio descriptions by default, when available, directly from the system settings. This meant users would not need to activate AD every time they watched a new video, saving considerable time and frustration. Could we save users this extra work by activating AD videos based on their system preferences, while still letting them change the setting in the UI later?
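The decision described above can be expressed as a small pure function. This is a hypothetical illustration, not the logic from our flow chart: `resolveInitialAudio`, `PlaybackContext`, and its fields are invented names. `systemPrefersAD` stands in for whatever accessibility setting a given platform exposes. Reading that setting is platform-specific and is not shown.

```typescript
// Hypothetical sketch: choose the audio version a video should start with.
// `systemPrefersAD` stands in for a platform accessibility setting; reading it is not shown.

type AudioChoice = "standard" | "ad";

interface PlaybackContext {
  systemPrefersAD: boolean | null; // null when the platform exposes no preference
  adAvailable: boolean;            // whether this video has an AD version at all
  userChoice: AudioChoice | null;  // explicit selection made in the player menu, if any
}

function resolveInitialAudio(ctx: PlaybackContext): AudioChoice {
  // A choice made in the player UI overrides the system default.
  if (ctx.userChoice === "standard") return "standard";
  if (ctx.userChoice === "ad") return ctx.adAvailable ? "ad" : "standard";
  // Otherwise follow the system preference, but only if an AD version exists.
  return ctx.systemPrefersAD === true && ctx.adAvailable ? "ad" : "standard";
}
```

For example, `resolveInitialAudio({ systemPrefersAD: true, adAvailable: true, userChoice: null })` returns `"ad"`. The same call with `userChoice: "standard"` returns `"standard"`. As a result, a choice made in the player always takes precedence over the system default.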

I created the following flow chart for our product and dev leads:

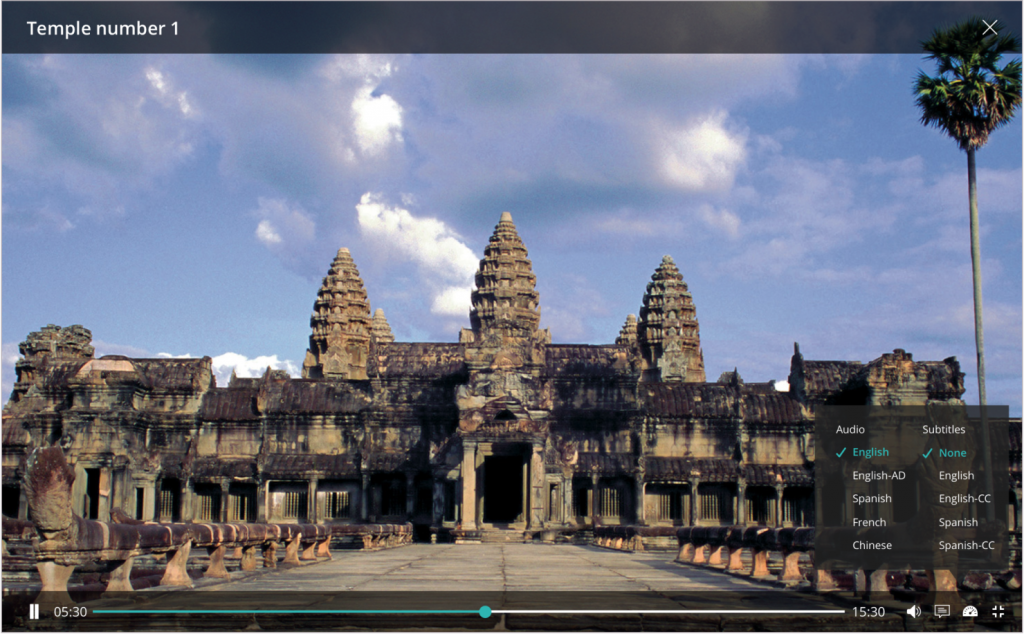

Next, we adjusted the Android and web UIs to account for the new settings. The menu does not reveal whether the AD option is an audio track or a separate video, so the same controls support both types.
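One way to keep the menu agnostic about the source is to model every audio option as a discriminated union and resolve the source only when the user selects it. The sketch below uses hypothetical names (`AudioOption`, `planSwitch`, `menuLabels`) and leaves out the actual player calls. It only computes what the player would need to do.

```typescript
// Hypothetical data model: the menu sees labels only; the source kind stays internal.
type AudioSource =
  | { kind: "track"; trackIndex: number } // alternate audio track in the same video
  | { kind: "video"; url: string };       // separate file, e.g. an AD mp4

interface AudioOption {
  id: string;
  label: string;       // what the user sees, e.g. "English (Audio Description)"
  source: AudioSource;
}

// What the player has to do to honor a selection.
type SwitchAction =
  | { type: "selectTrack"; trackIndex: number }
  | { type: "loadVideo"; url: string };

function planSwitch(option: AudioOption): SwitchAction {
  const src = option.source;
  if (src.kind === "track") {
    return { type: "selectTrack", trackIndex: src.trackIndex };
  }
  return { type: "loadVideo", url: src.url };
}

// The menu renders labels only, so both kinds of AD look identical to the user.
function menuLabels(options: AudioOption[]): string[] {
  return options.map((o) => o.label);
}
```

Because `menuLabels` only reads `label`, an AD option backed by a separate mp4 renders the same way as one backed by an audio track. Only `planSwitch` needs to know the difference.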

While the native player UI in iOS 11 supported AD videos by default, for iOS 10 and below we reused the custom header from our Android version.

We also alerted developers to update the timestamp if needed:

> …an {audio described file} will likely be a new video file, not an audio track. It may also have a longer runtime than the standard video; in that case, the timestamp would need to sync, and the final time would update to the AD video…
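The extra time in an AD video is inserted at specific points rather than spread evenly. Because of that, scaling the timestamp by the ratio of the two runtimes would drift. This sketch rests on one assumption: that sync points pairing matching moments in both files are available, for example from the AD script. The spec above does not say where such data would come from. Given those points, positions could be mapped with piecewise-linear interpolation. `SyncPoint` and `mapTime` are hypothetical names.

```typescript
// Hypothetical sketch: translate a playback position between the standard and AD videos.
// Assumes sync points pairing the same moment in both files are available.

type Timeline = "standard" | "ad";

interface SyncPoint {
  standard: number; // seconds in the standard video
  ad: number;       // seconds at the same moment in the AD video
}

// `points` must be non-decreasing in both fields and should span each video from start
// to end. A pause inserted for a description appears as two points with the same
// `standard` time and different `ad` times.
function mapTime(t: number, points: SyncPoint[], from: Timeline): number {
  if (points.length < 2) throw new Error("need at least two sync points");
  const to: Timeline = from === "standard" ? "ad" : "standard";
  const first = points[0];
  const last = points[points.length - 1];
  // Clamp positions outside the mapped range to its ends.
  if (t <= first[from]) return first[to];
  if (t >= last[from]) return last[to];
  for (let i = 1; i < points.length; i++) {
    const a = points[i - 1];
    const b = points[i];
    // The first segment whose end is at or past t contains it. Here a[from] < t,
    // so the segment has non-zero width on the `from` timeline.
    if (t <= b[from]) {
      const frac = (t - a[from]) / (b[from] - a[from]);
      return a[to] + frac * (b[to] - a[to]);
    }
  }
  return last[to]; // not reached for valid input
}
```

Consider the sync points `[{ standard: 0, ad: 0 }, { standard: 30, ad: 30 }, { standard: 30, ad: 35 }, { standard: 60, ad: 65 }]`, which model a 5-second description inserted at 0:30. With these points, 45 s in the standard video maps to 50 s in the AD video, and any AD position between 30 and 35 s maps back to 30 s. The last point also supplies the updated final time (65 s). With only a start point and an end point, the function reduces to simple proportional scaling.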